My Research Projects

Prompt templates from Google Docs

- Translation

- Quiz Generation

- Create a vocabulary list

- Translate into easy English

- Mollick and Mollick: Use LLMs as a language consultant

"Festival of Learning" from MIT News: MIT faculty, instructors, students experiment with generative AI in teaching and learning

Watch the video! (The event took place in January 2024. The article was published on April 29, 2024.)

ChatGPT, Prompt Engineering, and Language Instruction (July 2023)

This website (Google Site) provides my workshop slides and sample prompt templates. They are intended for Japanese language teachers, and the recorded presentation is in Japanese.

Various Sample Prompts for Language Teachers

- Classification task: This ACTFL-rubric prompt classifies students' essays based on the ACTFL rubrics.

- Easy Japanese: This easy-Japanese prompt rewrites difficult Japanese texts in easy Japanese. (The prompt is in English and the texts are in Japanese.)

- Easy Chinese: This easy-Chinese prompt rewrites difficult Chinese texts in easy Chinese.

- Emulate Human-like Feedback: This prompt attempts an error analysis of a student's writing. It provides the corrected sentence and feedback from GPT. (The prompt is in English and the erroneous sentences are in Japanese.)

- Multiple-choice Reading Comprehension Quiz Prompt: This reading comprehension quiz prompt generates a multiple-choice reading comprehension quiz from a given text.

- Dialogue Generation Prompt: This dialogue generation prompt generates dialogues in specified styles using given vocabulary.

- Vocabulary List Creator Prompt: This vocabulary-list prompt creates a vocabulary list from a newspaper article (Yomiuri Shimbun).

- Kanji Reading Quiz Prompt: This kanji reading quiz prompt generates a multiple-choice kanji reading quiz based on a given text.
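As an illustration of how a reusable prompt template like the ones above might be filled in programmatically, here is a minimal Python sketch for a multiple-choice reading comprehension quiz. The template wording, the `build_quiz_prompt` function, and its parameters are hypothetical and are not the actual prompts from the Google Docs; the resulting string would simply be pasted into (or sent to) an LLM.

```python
from string import Template

# Hypothetical template wording; the prompts in the Google Docs may differ.
QUIZ_TEMPLATE = Template(
    "You are a Japanese language teacher.\n"
    "Read the text below and write $n multiple-choice reading comprehension "
    "questions for $level learners.\n"
    "Give each question four options (A-D) and mark the correct answer.\n"
    "Write the questions in $question_language.\n\n"
    "Text:\n$text"
)


def build_quiz_prompt(text: str, n: int = 3, level: str = "intermediate",
                      question_language: str = "Japanese") -> str:
    """Fill the hypothetical quiz template; reject empty text or non-positive n."""
    if not text.strip():
        raise ValueError("text must not be empty")
    if n < 1:
        raise ValueError("n must be positive")
    return QUIZ_TEMPLATE.substitute(n=n, level=level,
                                    question_language=question_language,
                                    text=text.strip())
```

Keeping the variable parts (number of questions, learner level, question language) as parameters lets one template serve several classes without editing the prompt text by hand.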

Spring 2022 (UROP students: Stephen Wilson, Victor Luo, and Kenny Chen): "Development of a Multilingual Chatbot as a Pedagogical Approach to Language Education"

AI Chatbot: User inputs are translated into English with DeepL, OpenAI's GPT-3 produces a response, and the response is then translated back into the user's language with DeepL. This model speaks very naturally, but some information may be lost in translation, and its outputs are often too difficult for language learners.
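The translate, generate, translate-back pipeline described above can be sketched as follows. This is a minimal sketch under stated assumptions: `translate` and `generate` are hypothetical placeholders passed in as functions (in the project, DeepL and GPT-3 filled these roles), so no real DeepL or OpenAI API calls are shown.

```python
from typing import Callable

# Hypothetical signatures: translate(text, source_lang, target_lang) -> text,
# generate(english_prompt) -> english_reply. Real clients would be wrapped to fit these.
Translate = Callable[[str, str, str], str]
Generate = Callable[[str], str]


def chatbot_turn(user_text: str, user_lang: str,
                 translate: Translate, generate: Generate) -> str:
    """Run one chatbot turn: user language -> English -> model -> user language."""
    english_in = translate(user_text, user_lang, "EN")  # step 1: into English
    english_out = generate(english_in)                  # step 2: model replies in English
    return translate(english_out, "EN", user_lang)      # step 3: back to the user's language


if __name__ == "__main__":
    # Toy stand-ins so the sketch runs offline.
    table = {("こんにちは", "JA", "EN"): "Hello", ("Hi there!", "EN", "JA"): "やあ！"}
    fake_translate = lambda text, src, dst: table[(text, src, dst)]
    fake_generate = lambda prompt: "Hi there!" if prompt == "Hello" else "Sorry?"
    print(chatbot_turn("こんにちは", "JA", fake_translate, fake_generate))
```

Because every turn passes through two translations, meaning can drift at either step, and nothing in this pipeline adjusts the difficulty of the reply for learners.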

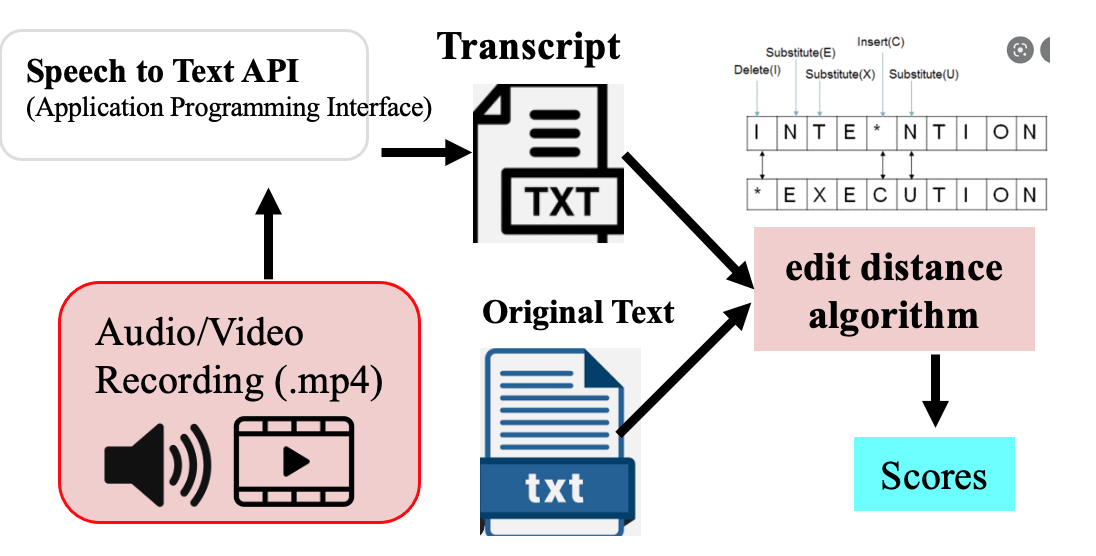

Spring 2022 to Fall 2022, with Feina Niu (UROP): "Automated Japanese Reading Assessment." The goal of this project is to develop a tool that can automatically assess students' read-aloud ability.

This research was presented at the Language Assessment Research Conference (LARC), University of Chicago, September 15, 2022.

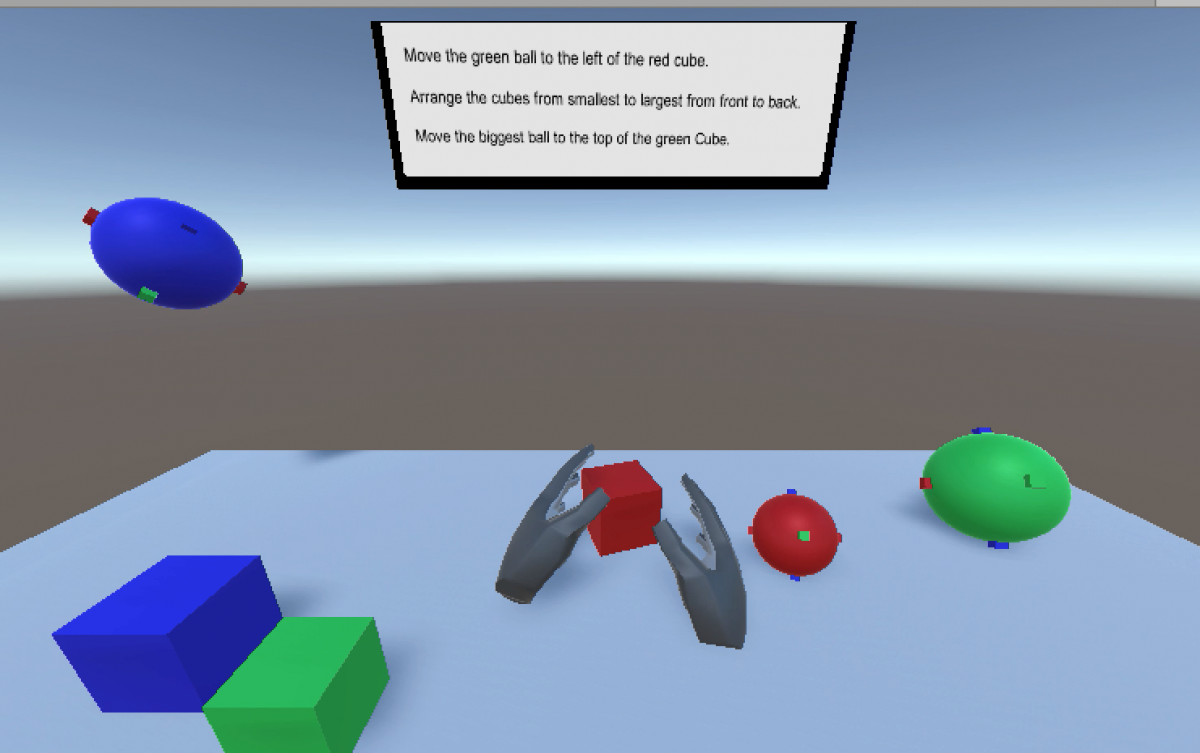

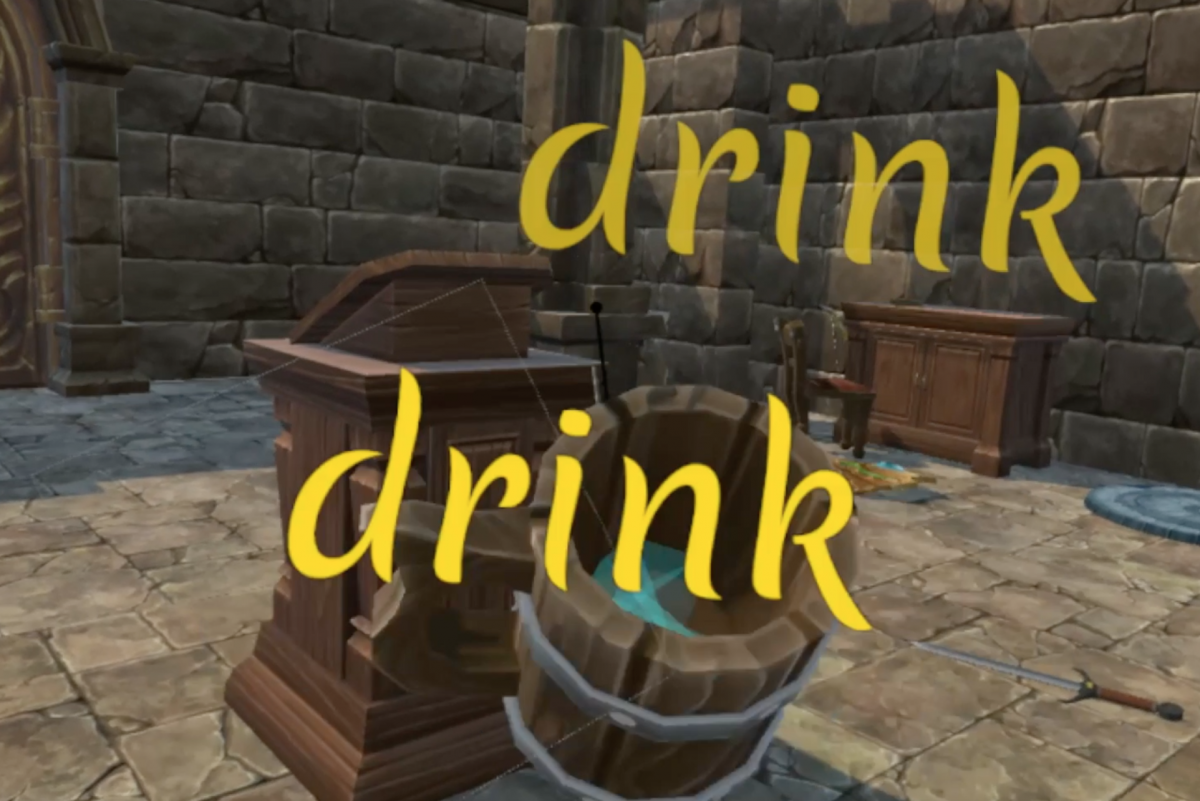

Summer 2021 (UROP student: Austin White): VR-Chat for Language Learning

Watch the video!

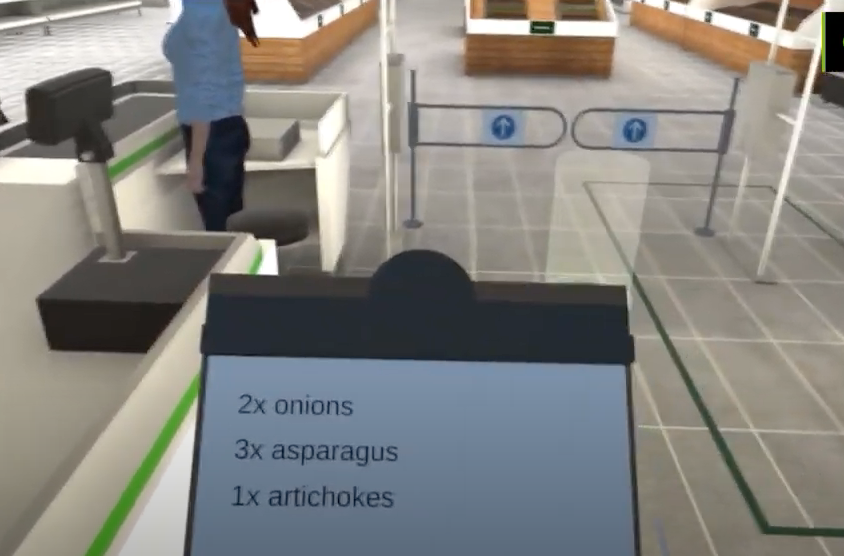

We explored the potential use of VR-Chat for language learning. We developed a language learning hub that consists of five rooms: (i) Writing Room; (ii) Theater Room; (iii) Touch and Listen Room; (iv) City Room; and (v) Restaurant Room. Using your avatar, you can interact with your friend(s) in these rooms to practice a foreign language!

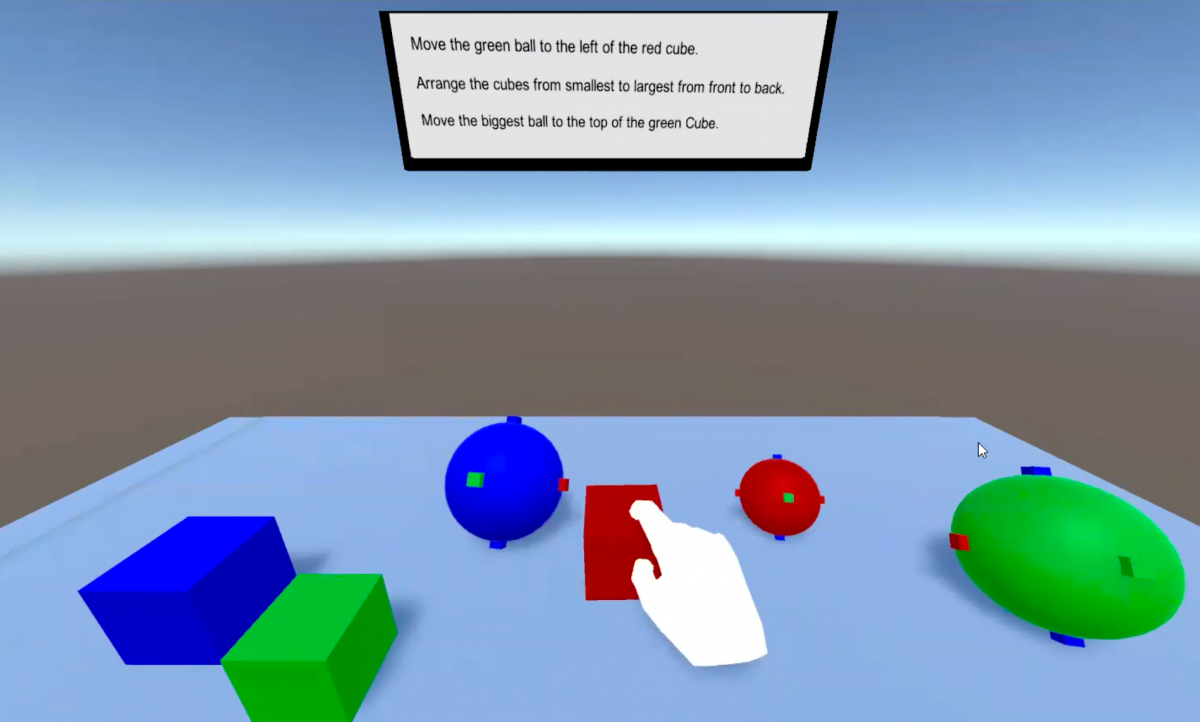

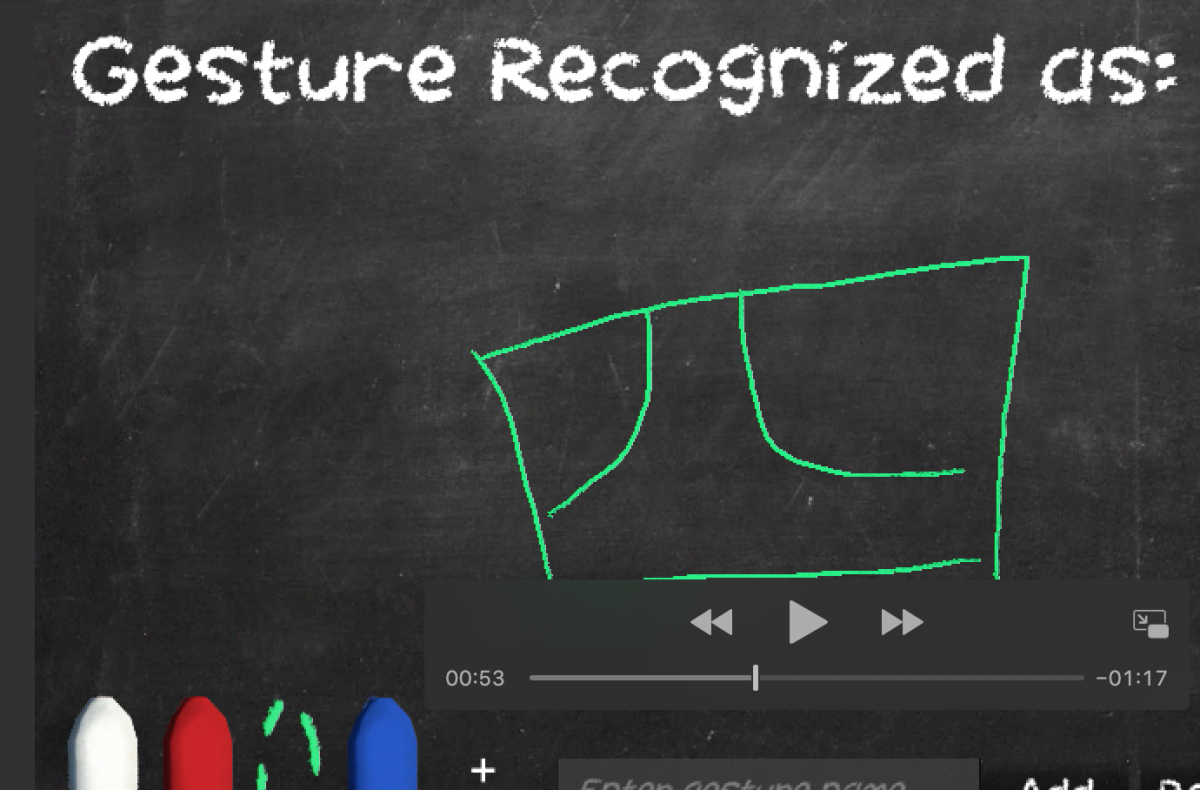

Fall 2020 UROP Project, VR with Leap Motion (UROP students: Qiuyue Liu, Mariela M Perez-Cabarcas, and Daniel Hu)

We developed VR-based language learning applications using Leap Motion. Here are some of the applications we developed.

Developed by Qiuyue Liu

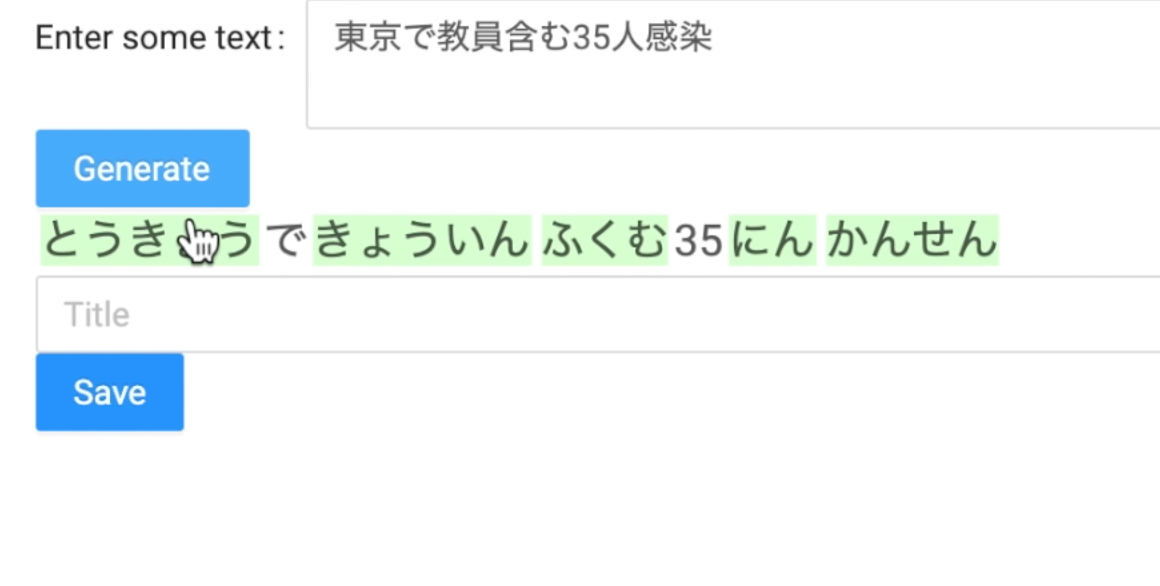

Summer 2020, Special Projects using NLP

- Automatic Kanji Quiz Maker (in collaboration with Cory Lynch; thanks to the generous support of Professor Richard Samuels, Ford International Professor of Political Science and Director of the Center for International Studies, MIT)

- AI Japanese Language Tutor with Google's AutoML (in collaboration with Alex Kimn, Yiqun Agnes Hu, and Dr. Tetsuro Takahashi from Fujitsu Research Lab)

- Japanese Grammar Error Maker (in collaboration with Alex Kimn)

VR for Language Learning (Summer 2020) UROP projects (in collaboration with Qiuyue Liu & Mariela M Perez-Cabarcas)

Kitchen Demo with Leap and Text-to-Speech (watch this video clip!)

- Writing Board with N$

- Moving 3D objects with Leap

VR for Language Learning (Spring 2020) UROP projects

- Kanji Game by Cory Lynch

- Grocery Store by Daniel Dangond

- Kanji Playground by Alice Nguyen

VR for Language Learning: “Let's Explore Various Types of ‘Interactivity’ in VR” (In collaboration with MIT's UROP student Daniel Dangond)

This project started in the spring semester of 2018 as a UROP project. We are currently developing a VR-based language learning application using Google's Dialogflow and IBM's speech recognition APIs. We plan to continue working on this project for AY2019-2020.

Version 2 (UROP students: Daniel Dangond, Cory Lynch, and Joonho Ko)

Also, see the 360 footage of Kurashiki (Okayama, Japan) below.


Watch the alpha version of our project video. See the entire 360 view of Kurashiki, using Google Poly.

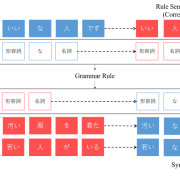

AI Tutor with Deep Learning [2nd Generation] (a current project at MIT, in collaboration with Alex Kimn and Tetsuro Takahashi)

AI Tutor is designed to automatically detect grammatical errors that learners of Japanese make and to suggest appropriate corrections as feedback in real time. This task of grammar error correction (GEC) is challenging because it is extremely hard (if not impossible) to predict the types of errors learners will make. However, if AI Tutor can perform GEC reliably, it would greatly benefit both learners and teachers. Learners who receive feedback in real time as they write sentences would find language learning more interactive and effective. Teachers would save time, since AI Tutor could correct learners' errors on their behalf.

We are currently in the process of incorporating neural networks into AI Tutor's system. In so doing, we plan to investigate the following two research questions:

- How can we increase the size of our training data (i.e., parallel data pairing erroneous sentences with their corrections)?

- What type of neural networks would work best for Japanese GEC and why?

Natural language processing techniques (with the help of human linguistic knowledge) will be used to tackle the first question (see Figure 1). To tackle the second question, we will start with building convolutional neural networks (see Figure 2). To the best of our knowledge, no other projects attempt to tackle Japanese GEC using NLP and ML, and thus our project can shed light on the potential of ML for language learning and teaching.
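One common way to synthesize error-correction pairs is to inject artificial errors into correct sentences. The sketch below swaps one particle at a time in a pre-segmented Japanese sentence. It is an illustrative assumption, not the project's actual pipeline: `PARTICLES` is a small hypothetical subset, and a real system would need a morphological analyzer to tell particles apart from identical-looking non-particle tokens.

```python
# Hypothetical subset of case/topic particles used to inject errors.
PARTICLES = ("は", "が", "を", "に", "で", "へ")


def particle_errors(tokens: list[str]) -> list[tuple[str, str]]:
    """Return (erroneous, corrected) sentence pairs, each differing by one particle."""
    correct = "".join(tokens)
    pairs = []
    for i, tok in enumerate(tokens):
        if tok not in PARTICLES:
            continue  # only whole tokens that are particles get replaced
        for sub in PARTICLES:
            if sub == tok:
                continue
            wrong = tokens[:i] + [sub] + tokens[i + 1:]
            pairs.append(("".join(wrong), correct))
    return pairs
```

For a five-token sentence such as 私 / は / 学校 / に / 行きます, this yields ten pairs (two particles, five substitutes each), including 私が学校に行きます paired with its correction 私は学校に行きます. Human linguistic knowledge would still be needed to filter out substitutions that happen to produce grammatical sentences.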

Figure 1: Synthesizing Training Data with NLP

Figure 2: Convolutional Neural Networks for AI Tutor

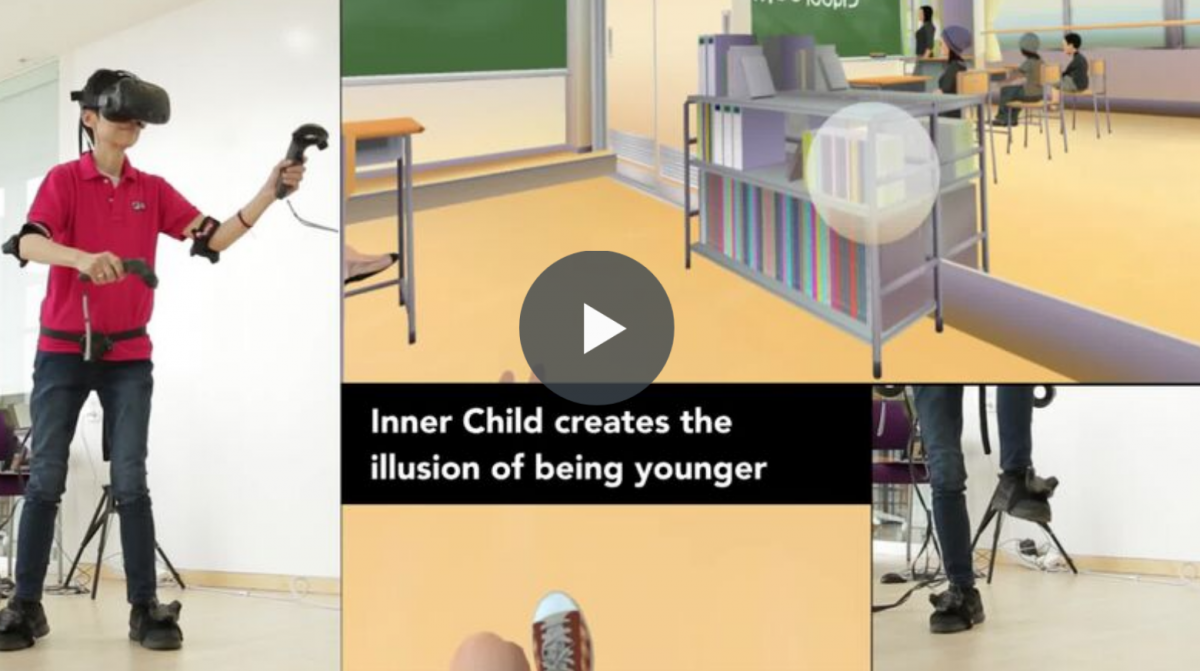

Inner Child (in collaboration with Christian David Vázquez Machado from the Fluid Interfaces Group at the Media Lab & Kanda University of International Studies, Japan)

- The results show that participants in the High Embodiment group performed significantly better than those in the Low Embodiment group immediately after the virtual reality experience.

- Participants in the High Embodiment group had significantly more positive attitudes toward the experience.

- The High Embodiment group showed much greater willingness to participate in a similar learning experience in the future.

- The High Embodiment group showed significantly higher engagement than the Low Embodiment group.

Language Learning in Virtual Reality: Words in Motion (in collaboration with Christian David Vázquez Machado from the Fluid Interfaces Group at the Media Lab & Kanda University of International Studies, Japan)

From Spring 2017 to Fall 2017, we investigated how kinesthetic learning affects vocabulary memorability. To this end, we developed a kinesthetic virtual reality platform for the HTC Vive that creates interactable objects and embeds in each of them a neural network that recognizes the movement patterns users perform with it. We call it "Words in Motion." Our experiments showed that kinesthetic learning enhanced students' vocabulary memorability.

Figure 3: Actions in the VR Kitchen

Augmented/Mixed Reality-based Language Learning Application(s) with Serendipitous Learning: WordSense (in collaboration with Christian David Vázquez Machado from the Fluid Interfaces Group at the Media Lab & Kanda University of International Studies, Japan)

During the fall semester of 2016, we worked on the development of language learning applications using Microsoft HoloLens. The project team included: (i) Christian David Vázquez Machado (a graduate student in the Fluid Interfaces Group at the MIT Media Lab); (ii) Louisa Rosenheck (Research Manager) from the Education Arcade, MIT; and (iii) three MIT undergraduate students. We developed several prototypes using AR/VR technologies. (See the short video clips of these prototypes below; click the snapshots to play them.)

The goal of our project was to explore new language learning experiences that take advantage of the affordances and efficacy of AR/VR technologies. We also promoted the concept of "serendipitous learning." (Serendipitous learning refers to learning experiences in which learners acquire knowledge through daily activities in everyday contexts; it often takes place accidentally or incidentally, at any time and in any place.)

The ACM Paper about Serendipitous Learning.

Prototypes: Object Recognition, Review Board, Sentences, Movie Clip

Virtual Reality (VR)/Mixed Reality (MR)-based Language Learning Application(s)

Many studies have shown that virtual reality (VR)/mixed reality (MR) technologies can provide teachers and students with unique learning environments and that VR/MR-based learning experiences are engaging and effective. These technologies can take a group of students to almost any environment imaginable. Learning experiences in those environments are highly contextual and realistic, sparking questions and conversations, and therefore they have great potential for language learning.

From Fall 2015 to Spring 2016, we developed a VR-based language learning application using the platform developed by the Fluid Interfaces Group at the Media Lab (Greenwald et al., 2015).

The development of this application was done by Remy Mock; Scott Greenwald was his technical supervisor. To see some of the features of this application, watch this video.

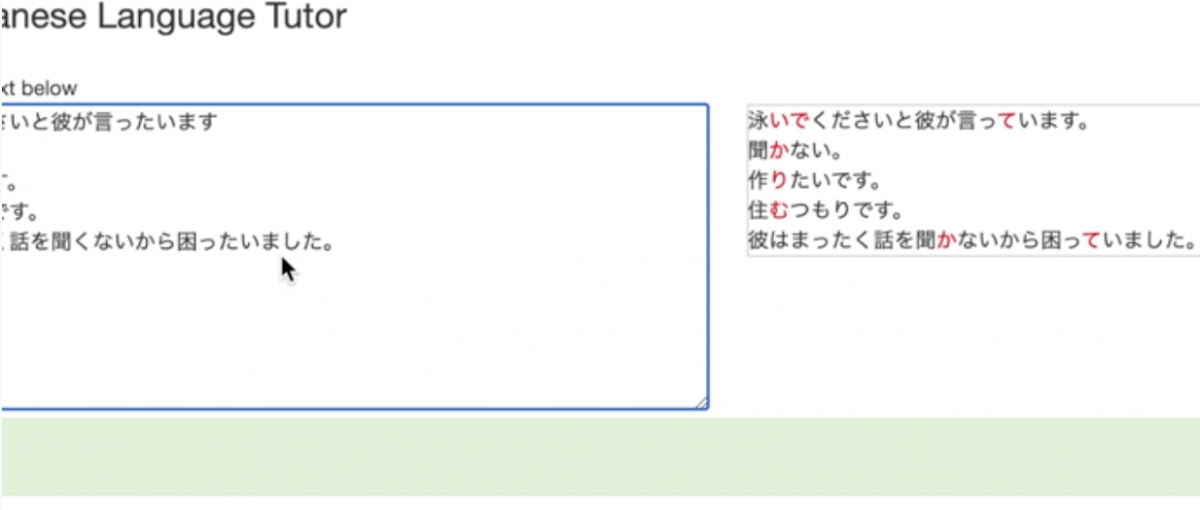

[1st Generation] AI Tutor (SuperUROP at MIT: Alex Kimn; volunteer UROP at MIT: Brandon A Perez)

The AI Teacher is an innovative online language learning tool for learners of Japanese (especially K-12 students and AP exam takers). The AI Japanese Teacher has the following characteristics:

- It is "interactive" in that the AI Teacher automatically catches users' mistakes as they type a sentence;

- It is "instructive" in that the AI Teacher gives users "just-in-time" feedback on the mistakes they made;

- It uses corpus data (currently, Aozora Bunko) to enhance users' learning process; and

- Its Japanese knowledge comes from Japanese language teachers via teachersourcing.
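To make the "interactive" and "teachersourcing" characteristics concrete, here is a minimal sketch in which teacher-contributed rules (a regular expression plus a feedback message) are checked against a sentence as the learner types. The `Rule` structure, the `check` function, and both example rules are hypothetical; this is not a description of the AI Teacher's actual internals.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    pattern: str   # regular expression contributed by a teacher (hypothetical format)
    feedback: str  # just-in-time message shown to the learner


# Two illustrative rules; a real rule set would come from many teachers.
RULES = [
    Rule(r"だです", "Use either だ or です here, not both."),
    Rule(r"いいく", "いい conjugates from よい: use よく (e.g., よくない)."),
]


def check(sentence: str, rules: list[Rule] = RULES) -> list[tuple[int, int, str]]:
    """Return (start, end, feedback) for every rule match, ordered by position."""
    hits = []
    for rule in rules:
        for m in re.finditer(rule.pattern, sentence):
            hits.append((m.start(), m.end(), rule.feedback))
    return sorted(hits)
```

Calling `check` on each keystroke (or after a short typing pause) would let feedback appear while the learner is still writing, and the character offsets indicate which part of the sentence to highlight.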

This is a collaboration with Dr. Tetsuro Takahashi (Fujitsu Lab.), and we recently received a research grant from the Japan Foundation, Los Angeles. Some of the AI Teacher's functionalities can be seen in this video (in Japanese).

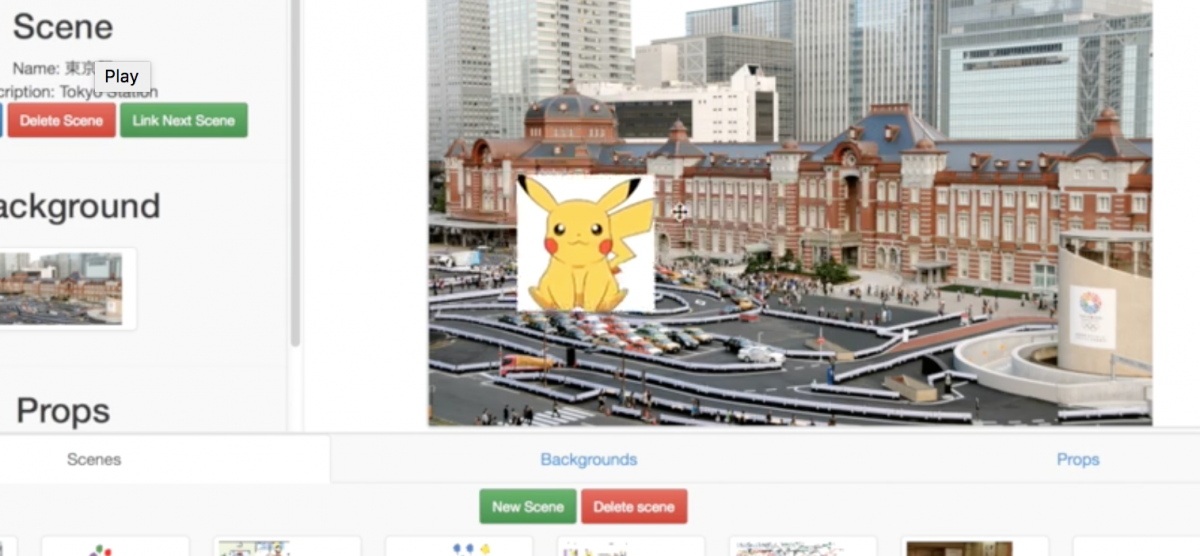

JaJan

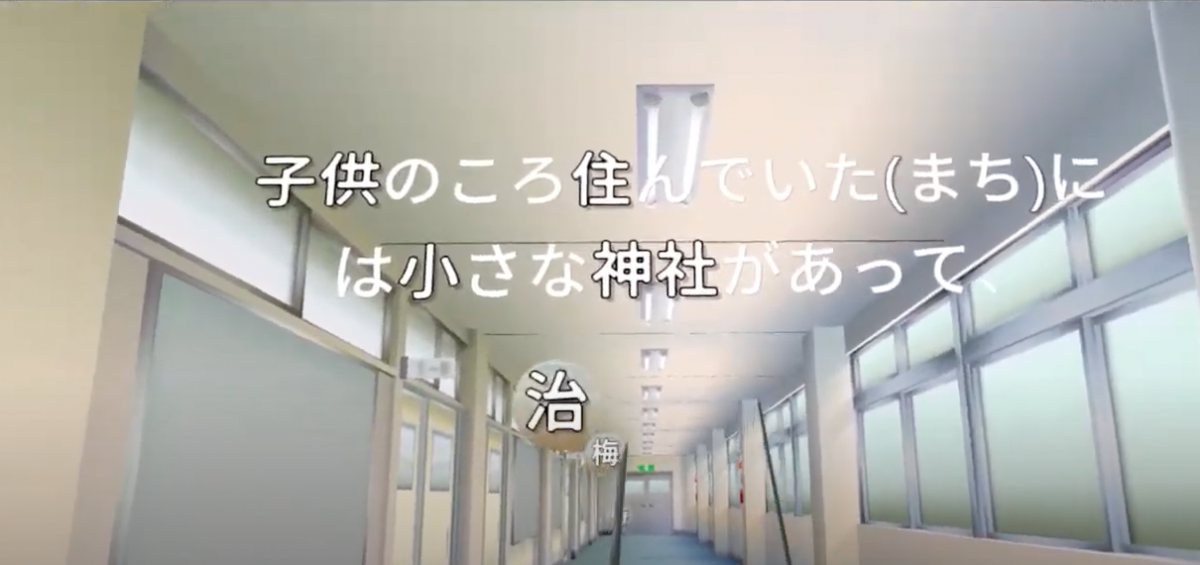

JaJan is a state-of-the-art language learning application that can provide a virtual study-abroad experience. The platform was originally developed by the Fluid Interfaces Group at the MIT Media Lab. The project is a collaboration among the Department of Global Studies and Languages (GSL) at MIT, the Media Lab, and Kanda University of International Studies, Japan, and it is funded by the Sano Educational Foundation, Japan.

JaJan's Scenes and Features

- Object Movement

- Video Background

- Linking Scenes

- Scene Maker

We are confident that JaJan can bring profound changes to the way learners experience language learning and make a significant contribution to the field of language education.

Our ultimate objective is practical: we will extend JaJan with network technologies so that it can be used in real classrooms and integrated into existing language curricula. We envision JaJan as an innovative language learning environment in which users from all over the world can participate, interact, and collaborate.

NLP Story Maker (Past)

Story Maker lets users illustrate their stories on the fly as they type them in natural language. Using NLP technology developed by the NLP group at Microsoft Research, users can enter unrestricted natural language into Story Maker, which responds with direct visual output. The tool could potentially change both the way we interact with computers and the way we compose text.

NLP Story Maker paper is available here. Also, the video of NLP Story Maker is available here courtesy of Michel Pahud at Microsoft Research.